Nerds love discussing their backup strategies, so I thought I'd give it a try.

You can create a Windows VM on FreeNAS and then mount your data into the VM. Make it look like an internal drive letter and Backblaze will accept it. A simple way to make it look like an internal drive is to use the Dokany mirror driver.

Goals

At a high level, I have a few goals for my backup strategy.

- Avoid permanent data loss. Permanent data loss is the absolute worst-case scenario and it must be avoided at all costs. I fully intend to never lose a single picture in my photo library, a note in my note archive, or any other file.

- Avoid bit-rot. As happy as I am with the Apple ecosystem in general, I'm disappointed that APFS does not implement data checksumming, and that I can't buy a MacBook with ECC RAM. Thus, to avoid bit-rot, long-term data storage should be done on systems which do offer protection against corruption of data over time.

- Maintain high availability. As an independent software contractor, I can't just run to the IT department and request a new workstation when the drive in my laptop dies: I am the IT department. So, in order to uphold the expectations of my clients, there should never be a day where I unexpectedly can't work due to a hardware failure.

- Maintain Security. I may choose to trust a vendor with storing data, but I don't want to have to trust them to not read it. All data should be encrypted with keys I manage before it leaves my control. Additionally, I'd rather not trust the vendor's bespoke backup client to perform this encryption or key management for me. This rules out most cloud backup providers.

So your Backblaze data might rot away given time. You have to do all the checksumming yourself and check the data you get back from Backblaze to see if it has been altered. Half an exabyte will have even more bit rot. I don't understand why Backblaze doesn't abandon its Linux hardware RAIDs and switch to ZFS, which does automatic checksumming and protects the data. ZFS distributes writes across the top-level vdevs. If a file is small enough, it won't be spread across all of the vdevs. Of course, during a normal txg commit you will likely have enough writes to write to all the vdevs concurrently.
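
Doing the checksumming yourself can be as simple as keeping a manifest of digests next to the data and re-checking it after a restore. The following is a minimal sketch, assuming SHA-256 and an in-memory manifest; the function names are hypothetical and not part of any Backblaze or ZFS tooling.

```python
import hashlib
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Return the hex SHA-256 digest of a file, read in chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_manifest(root: Path) -> dict[str, str]:
    """Map each file's path (relative to root) to its digest."""
    return {
        p.relative_to(root).as_posix(): sha256_of(p)
        for p in sorted(root.rglob("*"))
        if p.is_file()
    }


def find_mismatches(root: Path, manifest: dict[str, str]) -> list[str]:
    """List files that are missing or whose contents no longer match."""
    bad = []
    for rel_path, expected in manifest.items():
        candidate = root / rel_path
        if not candidate.is_file() or sha256_of(candidate) != expected:
            bad.append(rel_path)
    return bad
```

Build the manifest before uploading, store it separately from the data, and run `find_mismatches` against whatever you download later; an empty list means every file came back unaltered.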

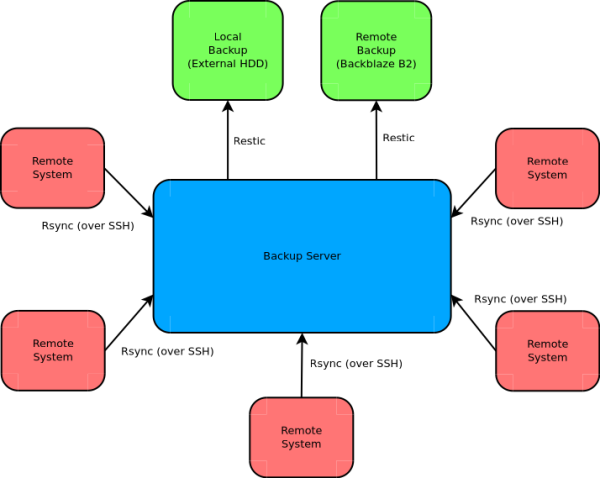

Overview

Which brings me to my actual backup strategy:

- Galactica[1], my main workstation, does a daily SuperDuper clone to Atlantia, an external USB SSD.

- Galactica and Prometheus (my wife's laptop) both automatically run network Time Machine backups to RAIDZ datasets on Gemini, a FreeNAS server.

- Galactica uses Vorta to perform periodic Borg backups to another dataset on Gemini.

- Gemini periodically performs Borg backups of Pegasus (general NAS volume) to a dataset on another ZFS pool.

- After a Borg backup finishes, Gemini runs a cloud sync task to sync the Borg repository to a bucket on Backblaze B2.
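
The last two steps above (back up with Borg, then sync the repository offsite) could be scripted roughly as follows. This is only a sketch under assumptions: the setup described here uses a FreeNAS cloud sync task configured in the UI, and `rclone sync` stands in for it; the repository path, remote name, and bucket name are all hypothetical.

```python
import subprocess
from typing import Callable


def borg_create_command(repo: str, source: str) -> list[str]:
    """Build a `borg create` call; {hostname} and {now} are Borg placeholders."""
    archive = f"{repo}::{{hostname}}-{{now}}"
    return ["borg", "create", "--stats", archive, source]


def b2_sync_command(repo: str, remote: str, bucket: str) -> list[str]:
    """Build an `rclone sync` call (assumes an rclone remote set up for B2)."""
    return ["rclone", "sync", repo, f"{remote}:{bucket}"]


def backup_then_sync(
    repo: str,
    source: str,
    remote: str,
    bucket: str,
    run: Callable = subprocess.run,
) -> None:
    # check=True raises CalledProcessError on failure, so the sync step
    # only runs after the Borg backup has finished successfully.
    run(borg_create_command(repo, source), check=True)
    run(b2_sync_command(repo, remote, bucket), check=True)
```

For example, `backup_then_sync("/mnt/data2/borg", "/mnt/data/pegasus", "b2", "my-borg-bucket")` would back up the (hypothetical) Pegasus mount point and then push the repository to the bucket. Because Borg encrypts before the data is written to the repository, the sync step only ever sees ciphertext.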

Threat Model

All of this aims to fill the needs I described at the outset. Specifically, here are the failure modes I've thought through.

| Scenario | Action |
|---|---|
| MacBook SSD dies on a work day. | Boot from SuperDuper clone and continue working. |
| MacBook hardware failure (other than SSD). | Get old MacBook from closet (was primary before current workstation) and boot from SuperDuper clone. |
| Need to provision a new MacBook to replace the dead one. | Either (1) restore from SuperDuper clone or (2) restore from Time Machine. |
| Discover corrupted files on MacBook's drive. | Either (1) restore from Time Machine or (2) restore from Borg repository. |
| Gemini's RAIDZ2 (data) loses 1 or 2 drives. | Replace drives and resilver. No data loss. |
| Gemini's RAIDZ2 (data) loses 3 or more drives. | Replace drives and restore from Borg backup. |
| Gemini's RAIDZ (data2) loses 1 drive. | Replace drive and resilver. No data loss. |
| Gemini's RAIDZ (data2) loses 2 or more drives. | Replace drives and restore from B2's version of the Borg backup. |
| Ransomware attack encrypts Galactica. | Restore from SuperDuper clone. |
| Ransomware attack encrypts Galactica and Atlantia. | Restore from Time Machine. |
| Ransomware attack encrypts Galactica, Atlantia, and all Gemini volumes. | Restore Gemini's ZFS pools from the last good snapshot, then restore Galactica. |
| House burns down and takes Galactica, Atlantia, and Gemini with it. | Buy new house and MacBook, restore from B2's version of the Borg backup. |
| Nuclear attack takes out my house as well as the B2 datacenter. | Data loss occurs, but I'm most likely dead too and, thus, don't care. |
| Backblaze becomes untrustworthy and starts reading my data. | They don't have the keys because it was encrypted by Borg. |
| Borg becomes untrustworthy and encrypts data in a flawed way. | Borg doesn't have access to my data since it's stored on B2. |
| Someone working for Backblaze purposefully alters Borg's (open-source) code to break their encryption, whilst also having access to my stored data. | They gain access to my data. But, this seems exceedingly unlikely. |
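
The drive-failure rows in the table follow one rule: a RAIDZ vdev survives as many failed drives as it has parity drives, and anything beyond that means restoring from backup. A small sketch of that rule, with hypothetical names chosen for illustration:

```python
# Number of drive failures each layout can absorb without data loss.
PARITY_DRIVES = {"raidz": 1, "raidz2": 2, "raidz3": 3}


def recovery_action(layout: str, failed_drives: int) -> str:
    """Return what to do after `failed_drives` drives fail in one vdev."""
    if failed_drives < 0:
        raise ValueError("failed_drives must not be negative")
    if failed_drives == 0:
        return "healthy"
    if failed_drives <= PARITY_DRIVES[layout]:
        return "replace drives and resilver"
    return "replace drives and restore from backup"
```

So `recovery_action("raidz2", 2)` is still a resilver, while `recovery_action("raidz", 2)` is a restore.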

This strategy upholds my goals.

- Security is upheld because no one gets both the data and the keys.

- Bit-rot is mitigated by ZFS data checksumming.

- Ransomware attacks are mitigated by multiple backups and ZFS snapshots.

- Availability is upheld by bootable SuperDuper clones and by keeping around a last-gen laptop.

- Data loss is mitigated by drive redundancy in Gemini; by using multiple types of backup software, at least one of which is open-source; and by moving some backups offsite.

So, barring nuclear war and/or some sort of nation-state attack on the Borg project, I don't plan on losing any data anytime soon.

[1] Yes, all of my computers and hard drives are named after ships in the Battlestar Galactica flotilla.

If you create an Application Key instead of using the Backblaze Master Application Key, the application key must have all privileges (Read and Write) for the targeted bucket.

Click on 'Create a Bucket'. Give your bucket a unique name, and choose 'private'.

- Specify 'Destination Type' as 'Backblaze'.

- Add a name for your destination.

- Fill in the Account ID (keyID), Application Key and Bucket name that you have just created.

- Click the 'save' button.

Destination Name

A generic name for your own convenience, which makes it easier to recognize the backup destination's role if you have more than one. Max length is 40 characters.

Backup Directory

This will be our starting point when accessing this destination.

Make sure the path does not start with '/'.

Write your path to where you want the backup to be stored.

You can leave the backup directory empty if you want the backup to be stored in the main directory.
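
The path rules above (no leading '/', empty meaning the main directory) can be captured in a tiny helper. This is an illustrative sketch only; `normalize_backup_dir` is a hypothetical name, not a JetBackup function.

```python
def normalize_backup_dir(raw: str) -> str:
    """Strip leading/trailing slashes; an empty result means the main directory."""
    parts = [part for part in raw.strip().split("/") if part]
    return "/".join(parts)
```

With this, `normalize_backup_dir("/backups/daily/")` gives `backups/daily`, and an empty string stays empty.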

Free Disk Space Limit

This option checks if the destination's disk space has reached the specified limit before it executes the backup. When enabled, JetBackup will not perform the backup when the used disk space is over the specified limit.
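
The check described here boils down to comparing used space against a percentage threshold before starting. Below is a minimal sketch of that rule for a locally mounted path, assuming the limit is expressed as a percentage of total capacity; it is not JetBackup's implementation, and the function names are hypothetical.

```python
import shutil


def is_over_limit(used_bytes: int, total_bytes: int, limit_percent: float) -> bool:
    """True when used space exceeds the configured percentage of capacity."""
    used_percent = 100.0 * used_bytes / total_bytes
    return used_percent > limit_percent


def should_run_backup(path: str, limit_percent: float) -> bool:
    """Skip the backup when the filesystem holding `path` is over the limit."""
    usage = shutil.disk_usage(path)
    return not is_over_limit(usage.used, usage.total, limit_percent)
```

For example, with a 90% limit a destination that is 95% full would be skipped, while one that is exactly 90% full would still be backed up to.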

Account ID

The identifier for the account.

Application Key

The account Application Key.

Bucket

To create a bucket using the Backblaze console, see "Creating a Bucket" under Backblaze B2 Cloud Storage Buckets.

Upload Retries

If the upload fails for some reason, retry the upload x times.
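
A retry setting like this is usually a loop around the upload call. The sketch below assumes "x retries" means x additional attempts after the first failure, and takes the upload as a plain callable; `upload_with_retries` is a hypothetical helper, not part of JetBackup or the B2 API.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def upload_with_retries(
    upload: Callable[[], T], retries: int, delay_seconds: float = 0.0
) -> T:
    """Call `upload`, retrying up to `retries` more times if it raises."""
    attempts = retries + 1  # the first try plus the configured retries
    for attempt in range(1, attempts + 1):
        try:
            return upload()
        except Exception:
            if attempt == attempts:
                raise  # out of retries: surface the last error
            time.sleep(delay_seconds)
    raise ValueError("retries must not be negative")
```

In practice you would likely narrow the `except` clause to network or I/O errors and add a growing delay between attempts.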